Scientists from Nanyang Technological University, Singapore (NTU Singapore), in collaboration with clinicians at Tan Tock Seng Hospital (TTSH) in Singapore, have developed a novel method that uses artificial intelligence (AI) to screen for glaucoma, a group of eye diseases that can cause vision loss and blindness through damage to the optic nerve at the back of the eye.

The AI-enabled method uses algorithms to differentiate optic nerves with glaucoma from those that are normal by analyzing stereo fundus images—multi-angle 2D images of the retina that are combined to form a 3D image.

When tested on stereo fundus images from TTSH patients undergoing expert examination, the AI method yielded an accuracy of 97 percent in diagnosing glaucoma.

Glaucoma is often called “the silent thief of sight” as it is usually asymptomatic until its later stages, when prognosis is poor. It is the principal cause of irreversible blindness worldwide and, in tandem with the rapid growth of the aging population, is expected to affect 111.8 million people globally by 2040, up from 76 million in 2020.

The automated glaucoma diagnosis method developed by NTU and TTSH, described in a study published in the peer-reviewed scientific journal Methods in June 2021, could potentially be used in less developed areas where patients lack access to ophthalmologists, said the scientists.

The study exemplifies NTU’s research efforts as part of its 2025 strategic plan to be at the forefront of tackling four of humanity’s grand challenges, one of which is to respond to the needs and challenges of healthy living and aging.

Dr. Leonard Yip, co-author of the study and Head of Glaucoma Service at the National Healthcare Group (NHG) Eye Institute, TTSH, said: “Many glaucoma patients remain undiagnosed in the community, and in developing countries like India, the percentage of undiagnosed cases may be well over 90 percent. While cases are usually picked up during routine eye checks, population-based screening is challenging due to the specialized and expensive equipment or trained experts required. The process of manually inspecting individual retinal images is also time-consuming and depends on subjective evaluation by experts. Our method of using AI, in contrast, could potentially be more efficient and economical.”

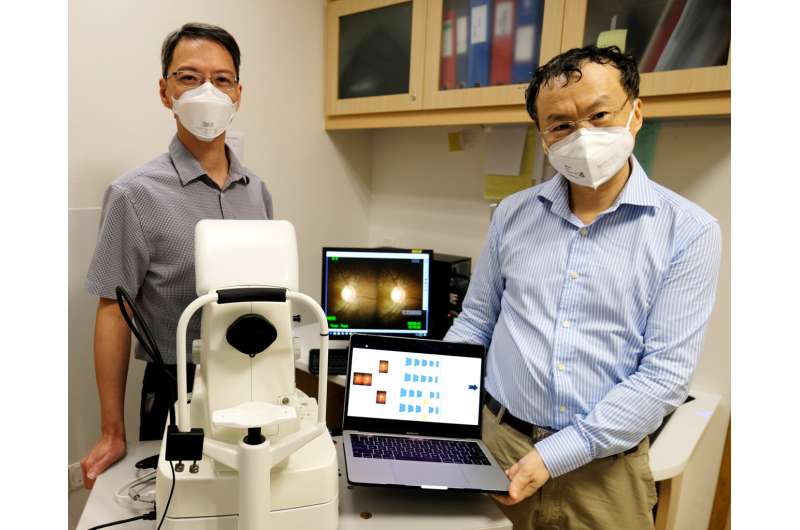

Associate Professor Wang Lipo from the NTU School of Electrical and Electronic Engineering and lead author of the study said: “Through a combination of machine learning techniques, our team has developed a screening model that can diagnose glaucoma from fundus images, removing the need for ophthalmologists to take various clinical measurements (such as internal eye pressure) for diagnosis. The ease of use of our robust automated glaucoma diagnosis approach means that any healthcare practitioner could make use of the system to help in glaucoma screening. This will be especially helpful in geographical areas with less access to ophthalmologists.”

The team is now testing their algorithms on a larger dataset of patient fundus images taken at TTSH. They are also looking at how the software can be ported to a mobile phone application so that, when used in conjunction with a fundus camera or lens adaptor for mobile phones, it could be a feasible glaucoma screening tool in the field.

How it works

The automated glaucoma diagnostic system developed by the team at NTU and TTSH uses a set of algorithms to analyze stereo fundus images taken as pairs by two cameras from different viewpoints. These 2D ‘left’ and ‘right’ images of the fundus help to form a 3D view when combined.

Using two images ensures that if one image is poor quality, the other image can usually compensate and the system can maintain its accurate performance, said the scientists.

The set of algorithms is made up of two components: a deep convolutional neural network and an attention-guided network. The former is loosely modeled on how the human brain adapts as it learns new things, while the attention-guided network imitates the brain’s manner of selectively focusing on a few relevant features, in this case the optic nerve head region in the fundus images.

The outputs from these two components are then fused together to generate the final prediction result.
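The article does not say how the two outputs are fused. As a rough illustration only, the Python sketch below assumes each component produces a glaucoma probability between 0 and 1 and that the two are combined by a weighted average. The function name, the weight and the threshold are all hypothetical and are not taken from the study.

```python
def fuse_predictions(cnn_prob, attention_prob, cnn_weight=0.5, threshold=0.5):
    """Combine two glaucoma probabilities into a single prediction.

    Assumption: fusion is a weighted average of the two probabilities.
    The study may use a different rule; this is only an illustration.
    """
    if not 0.0 <= cnn_weight <= 1.0:
        raise ValueError("cnn_weight must be between 0 and 1")
    # Weighted average of the two components' outputs.
    fused = cnn_weight * cnn_prob + (1.0 - cnn_weight) * attention_prob
    # Call the image "glaucoma" when the fused score reaches the threshold.
    label = "glaucoma" if fused >= threshold else "normal"
    return fused, label


# Hypothetical outputs from the two components for one stereo pair.
fused, label = fuse_predictions(0.9, 0.6)
print(round(fused, 2), label)
```

A weighted average is one of the simplest ways to let two models vote; other approaches, such as feeding both outputs into a further trained layer, are equally plausible here.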

To test their algorithms, the scientists first reduced the resolution of 282 fundus images (70 glaucoma cases and 212 healthy cases) taken of TTSH patients during their eye screening, before training the algorithms with 70 percent of the dataset.
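To make the 70/30 division concrete, here is a minimal Python sketch of one way such a split could be done. It assumes the split is stratified, meaning glaucoma and healthy images are divided separately so that both sets keep roughly the same mix of cases. The article does not say whether the team did this, and the function name and seed are hypothetical.

```python
import random


def stratified_split(labels, train_fraction=0.7, seed=0):
    """Return (train_indices, test_indices), splitting each class separately.

    Assumption: a stratified split; the article only states the 70/30 ratio.
    """
    rng = random.Random(seed)
    # Group image indices by their label.
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(len(indices) * train_fraction)
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return sorted(train), sorted(test)


# Class counts reported in the article: 70 glaucoma and 212 healthy images.
labels = ["glaucoma"] * 70 + ["healthy"] * 212
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

With these counts the sketch places 197 images in the training set and 85 in the test set. The exact numbers used in the study are not given in the article.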

To generate more training samples, the scientists also applied image augmentation—a technique that involves applying random but realistic transformations, such as image rotation—to increase the diversity of the dataset used to train the algorithms, which enhances the algorithms’ classification accuracy.
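The idea can be shown with a small Python sketch. It treats an image as a grid of pixel values and, as a simplification, rotates it only in 90-degree steps. Real pipelines typically use small-angle rotations with interpolation, and the article does not list which transformations the team applied, so the helper names and choices below are hypothetical.

```python
import random


def rotate90(image):
    """Rotate a 2D image (a list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image, rng):
    """Return a copy of the image rotated by a random multiple of 90 degrees.

    Assumption: 90-degree steps stand in for the rotations used in the study.
    """
    result = [list(row) for row in image]
    for _ in range(rng.randrange(4)):  # 0, 1, 2 or 3 quarter turns
        result = rotate90(result)
    return result


rng = random.Random(0)
image = [[1, 2],
         [3, 4]]  # a toy 2x2 "image"
# Each call yields another training sample derived from the same picture.
augmented = [augment(image, rng) for _ in range(3)]
for sample in augmented:
    print(sample)
```

Each augmented copy contains the same pixels in a different orientation, so the model sees more varied examples without any new patients being photographed.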

AI-enabled screening method achieves 97% accuracy

The joint research team then tested their screening method on the remaining 30 percent of the patient images and found that it had an accuracy of 97 percent in correctly identifying glaucoma cases, and a sensitivity (the fraction of cases correctly classified among all positive glaucoma cases) of 95 percent. This was higher than other state-of-the-art deep learning-based methods also trialed during the study, which yielded sensitivities ranging from 69 to 89 percent.
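Accuracy and sensitivity measure different things, which the short Python sketch below illustrates. The labels in the example are invented toy data, not results from the study, and the function name is hypothetical.

```python
def accuracy_and_sensitivity(y_true, y_pred, positive="glaucoma"):
    """Compute accuracy and sensitivity from true and predicted labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    # True positives: glaucoma cases the model flagged as glaucoma.
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    # False negatives: glaucoma cases the model missed.
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp + fn == 0:
        raise ValueError("sensitivity is undefined without positive cases")
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    sensitivity = tp / (tp + fn)
    return accuracy, sensitivity


# Toy data: 4 glaucoma and 6 healthy eyes, with one miss and one false alarm.
y_true = ["glaucoma"] * 4 + ["healthy"] * 6
y_pred = ["glaucoma"] * 3 + ["healthy"] * 6 + ["glaucoma"]
accuracy, sensitivity = accuracy_and_sensitivity(y_true, y_pred)
print(accuracy, sensitivity)
```

In this toy case 8 of 10 eyes are labeled correctly (accuracy 0.8), but only 3 of the 4 glaucoma cases are caught (sensitivity 0.75). Because healthy eyes outnumber glaucoma cases, as they do in the TTSH dataset, sensitivity is the figure that shows how many patients with the disease a screening tool would actually pick up.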

The scientists also found that using a pair of stereo fundus images improved the sensitivity of their screening system. When single fundus images were used, the algorithms had a lower sensitivity of 85 to 86 percent.

NTU Assoc Prof Wang said: “Excellent and stable performance is especially important in medical diagnosis, and this study has shown that our model of combining deep convolutional neural network with attention mechanisms has resulted in a reliable and efficient AI-enabled screening approach for glaucoma. Using both left and right fundus images in a stereo pair to perform glaucoma screening has also helped to significantly improve the robustness of the screening model. Going forward, we are looking to further finetune our algorithms and validate our AI approach’s clinical use by further testing them on more patient images.”
